Good to see the effort you are putting into learning. I assure you that these concepts are not hard to understand.

In this lesson, I will explain the Partial F-Test in Multivariate Linear Regression. I assume that you have a basic knowledge of regression, so we will begin with a recap of Multivariate Linear Regression.

I will try to keep it simple and clear.

Content

- What is Multivariate Linear Regression?

- What is Partial F-Test?

- How it Works

- The Fchange Statistic

- Automated Modelling Algorithms

- Final Notes

- Watch the video

1. What is Multivariate Linear Regression?

Remember that regression is about finding a relationship between one variable, called the dependent (or target) variable, and the independent (or explanatory) variable.

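The typical equation of a simple linear regression is the regression line, a straight line with intercept β0 and slope β1:

$$y = \beta_0 + \beta_1 x$$

where y is the dependent variable

x is the independent variable

β0 is the intercept and β1 is the slope of the line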
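As a quick illustration, the intercept β0 and slope β1 are usually estimated by least squares. Below is a minimal Python sketch with made-up data; the function name `fit_simple_regression` is hypothetical and not from any library.

```python
def fit_simple_regression(x, y):
    """Least-squares intercept b0 and slope b1 for the line y = b0 + b1*x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Slope = sum of cross-deviations divided by sum of squared x-deviations
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    b1 = sxy / sxx
    # The least-squares line always passes through (mean_x, mean_y)
    b0 = mean_y - b1 * mean_x
    return b0, b1


# Hypothetical data points
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [3.1, 4.9, 7.2, 8.8, 11.0]
b0, b1 = fit_simple_regression(x, y)
print(f"y = {b0:.2f} + {b1:.2f}x")  # y = 1.09 + 1.97x
```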

In multivariate linear regression, the dependent variable y depends on two or more independent variables x1, x2, …, xk.

The equation for multivariate linear regression is:

$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \dots + \beta_k x_k + \varepsilon$$

where x1, x2, …, xk are the independent variables

y is the dependent variable

β0, β1, …, βk are the regression coefficients

ε is the error term
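To make the equation concrete, here is a minimal sketch of estimating β0, …, βk by ordinary least squares, which solves the normal equations (XᵀX)β = Xᵀy. It is written in plain Python so it runs without dependencies. The function names are hypothetical, and in practice a numerical library would do this more robustly.

```python
def solve_linear_system(a, b):
    """Solve a @ x = b with Gaussian elimination and partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        # Swap in the row with the largest pivot for numerical stability
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_multivariate(columns, y):
    """Return [b0, b1, ..., bk] minimizing squared error for y = b0 + b1*x1 + ... + bk*xk."""
    # Design matrix: a leading 1 for the intercept, then one column per variable
    rows = [[1.0] + [col[i] for col in columns] for i in range(len(y))]
    p = len(rows[0])
    # Normal equations: (X^T X) beta = X^T y
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    return solve_linear_system(xtx, xty)


x1 = [0.0, 1.0, 2.0, 3.0, 4.0]
x2 = [1.0, 0.0, 2.0, 1.0, 3.0]
y = [4.0, 3.0, 11.0, 10.0, 18.0]  # exactly 1 + 2*x1 + 3*x2, i.e. no error term
betas = fit_multivariate([x1, x2], y)
print([round(b, 6) for b in betas])  # [1.0, 2.0, 3.0]
```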

2. What is Partial F-Test?

The Partial F-Test is a statistical test used in multivariate linear regression to determine whether a set of additional independent variables should be included when fitting the model. In other words, it helps decide how many (and which) variables are needed to create a good fit.

This matters because if too many variables are included, the model becomes unnecessarily complex and may overfit. On the other hand, if too few variables are used, we may get a weak fit.

3. How it works

Let’s assume that we have created a multivariate regression model between the dependent variable y and the independent variables x1, x2, …, xf.

Now we would like to improve the model by adding the independent variables xf+1, xf+2, …, xk.

The question is whether adding these variables actually improves the model or not.

To answer it, we perform a Partial F-Test, which requires calculating the Fchange statistic.


4. The Fchange Statistic

In this section, I will explain how to carry out the Partial F-Test.

The first step is to assume that the model would not be improved by adding the new independent variables. This assumption is our null hypothesis. It also means that the regression coefficients of the additional variables are all equal to zero.

So, first, we can state the null hypothesis this way:

$$H_0: \beta_{f+1} = \beta_{f+2} = \dots = \beta_k = 0$$

that is, the additional variables do not improve the model. The alternative hypothesis is that at least one of these coefficients is not zero.

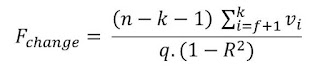

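The next step is to calculate the Fchange statistic:

$$F_{\text{change}} = \frac{(R_k^2 - R_f^2)/q}{(1 - R_k^2)/(n - k - 1)}$$

where

Rk² and Rf² are the coefficients of determination (squared multiple correlation coefficients) of the full model with k independent variables and the reduced model with f independent variables

vi is the part of Rk² contributed by the ith variable, so Rk² − Rf² = vf+1 + … + vk is the improvement due to the additional variables

n is the number of observations, and n − k − 1 is the residual degrees of freedom of the full model

q is the number of variables left out of the reduced model (q = k − f)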
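Once both models have been fitted, the formula is simple arithmetic. A minimal sketch, assuming you already have R² for the full and the reduced model; the function name `f_change` and the numbers in the example are hypothetical.

```python
def f_change(r2_full, r2_reduced, n, k, f):
    """Partial F statistic for adding q = k - f variables to a model with f variables.

    r2_full:    R^2 of the full model with k independent variables
    r2_reduced: R^2 of the reduced model with f independent variables
    n:          number of observations
    """
    q = k - f             # number of variables left out of the reduced model
    df_resid = n - k - 1  # residual degrees of freedom of the full model
    numerator = (r2_full - r2_reduced) / q
    denominator = (1.0 - r2_full) / df_resid
    return numerator / denominator


# Hypothetical numbers: 50 observations, 3 variables in the reduced model, 5 in the full model
F = f_change(r2_full=0.60, r2_reduced=0.50, n=50, k=5, f=3)
print(round(F, 4))  # 5.5
```

Because R² = 1 − SSE/SST for both models, the same statistic can equivalently be computed from residual sums of squares as ((SSEf − SSEk)/q) / (SSEk/(n − k − 1)).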

If the null hypothesis is true, Fchange follows an F distribution with q and n − k − 1 degrees of freedom.

If the significance level (p-value) of Fchange is high enough, for example above a chosen α such as 0.05, we cannot reject the null hypothesis, and we conclude that the additional variables do not add any improvement to the model.

However, if the p-value is close to 0 (below α), we reject the null hypothesis, and the additional variables should be included in the model.
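To turn Fchange into a p-value you need the upper tail of the F distribution. Statistics libraries provide this directly (for example, SciPy's `scipy.stats.f.sf`); the sketch below is a dependency-free alternative that evaluates the tail through the regularized incomplete beta function, using the standard continued-fraction expansion. The function names, the Fchange value of 5.5 and α = 0.05 are assumptions for the example. For q = 2 the tail has the closed form (d2/(d2 + 2F))^(d2/2), so the example's p-value is exactly 0.8^22 ≈ 0.0074, which makes a handy check.

```python
import math

_FPMIN = 1e-300  # floor that keeps the continued fraction from dividing by zero


def _beta_cf(a, b, x, max_iter=300, eps=3e-15):
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > _FPMIN else _FPMIN)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even term of the fraction
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > _FPMIN else _FPMIN)
        c = 1.0 + aa / c
        c = c if abs(c) > _FPMIN else _FPMIN
        h *= d * c
        # Odd term of the fraction
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > _FPMIN else _FPMIN)
        c = 1.0 + aa / c
        c = c if abs(c) > _FPMIN else _FPMIN
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def regularized_beta(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log(1.0 - x))
    # Use the fraction where it converges quickly, otherwise the symmetry I_x(a,b) = 1 - I_(1-x)(b,a)
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _beta_cf(a, b, x) / a
    return 1.0 - front * _beta_cf(b, a, 1.0 - x) / b


def f_p_value(f_stat, df1, df2):
    """Upper-tail probability P(F > f_stat) for an F(df1, df2) distribution."""
    x = df2 / (df2 + df1 * f_stat)
    return regularized_beta(df2 / 2.0, df1 / 2.0, x)


# Hypothetical result: Fchange = 5.5 with q = 2 and n - k - 1 = 44
p_value = f_p_value(5.5, 2, 44)
alpha = 0.05  # chosen significance level
print(f"p = {p_value:.4f}")
if p_value < alpha:
    print("Reject H0: include the additional variables")
else:
    print("Cannot reject H0: the additional variables do not improve the model")
```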

5. Automated Modelling Algorithms

As you may have figured out, performing the Partial F-Test manually would be very tedious, especially when many independent variables are being considered. That is why automated methods are used to search for a good fit.

How they work: these algorithms decide which independent variables end up in the final model, each following a different strategy.

(a) Forward Selection

This algorithm follows two steps

Step 1: Select the independent variable with the highest absolute correlation coefficient with the dependent variable. Then calculate the F statistic for the linear regression with this single variable to check whether it gives a significant fit. If the test is not significant, the algorithm stops with no variables selected. Otherwise, continue to Step 2.

Step 2: Take the next variable with the highest partial correlation coefficient with the dependent variable, that is, its correlation after accounting for the variables already in the model. Calculate the partial F statistic for adding this variable. If the calculated value is larger than a preset threshold, add the variable and repeat Step 2. Otherwise, the algorithm stops.
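The two steps above can be sketched in plain Python. This is a minimal illustration, not a reference implementation: the function names, the F-to-enter threshold of 4.0 and the small data set are assumptions, and the least-squares fit solves the normal equations directly instead of calling a statistics library. At each step the sketch adds the candidate with the largest partial F. For a single added variable this ranks candidates the same way as the partial correlation, and in Step 1 (starting from the intercept-only model) it picks the variable with the highest absolute correlation.

```python
def _solve(a, b):
    """Gaussian elimination with partial pivoting for a @ x = b."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def r_squared(columns, y):
    """R^2 of an ordinary least-squares fit of y on an intercept plus the given columns."""
    rows = [[1.0] + [col[i] for col in columns] for i in range(len(y))]
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    beta = _solve(xtx, xty)
    mean_y = sum(y) / len(y)
    sse = sum((yi - sum(b * v for b, v in zip(beta, r))) ** 2 for r, yi in zip(rows, y))
    sst = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - sse / sst


def partial_f(r2_full, r2_reduced, n, k, q):
    """Fchange for q extra variables; k counts the variables in the larger model."""
    return ((r2_full - r2_reduced) / q) / ((1.0 - r2_full) / (n - k - 1))


def forward_selection(variables, y, f_to_enter=4.0):
    """Add, one at a time, the variable with the largest partial F while it exceeds f_to_enter."""
    n = len(y)
    selected, remaining = [], list(range(len(variables)))
    r2_current = 0.0  # intercept-only model
    while remaining:
        k = len(selected) + 1
        if n - k - 1 <= 0:
            break  # not enough observations to test another variable
        best_j, best_f, best_r2 = None, -1.0, None
        for j in remaining:
            r2 = r_squared([variables[i] for i in selected + [j]], y)
            f = partial_f(r2, r2_current, n, k, 1)
            if f > best_f:
                best_j, best_f, best_r2 = j, f, r2
        if best_f < f_to_enter:
            break  # test not significant: stop
        selected.append(best_j)
        remaining.remove(best_j)
        r2_current = best_r2
    return selected


# Hypothetical data: y is built from x1 and x2 plus a little noise; x3 is unrelated
x1 = [1, 2, 3, 4, 5, 6, 7, 8]
x2 = [3, 1, 4, 1, 5, 9, 2, 6]
x3 = [2, 7, 1, 8, 2, 8, 1, 8]
noise = [0.1, -0.2, 0.05, 0.15, -0.1, 0.0, 0.2, -0.2]
y = [1 + 2 * a + 3 * b + e for a, b, e in zip(x1, x2, noise)]
sel = forward_selection([x1, x2, x3], y)
print(sel)
```

Here x1 and x2 (indices 0 and 1) should be the first two variables selected; whether x3 also enters depends on how it happens to line up with the noise.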

(b) Backward Elimination

This algorithm is the opposite of the Forward Selection procedure.

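Here, we begin with all the variables included in the regression model and then remove the least useful ones.

Starting with the variable that contributes the least (the one with the smallest partial F statistic; for a single variable, the partial F equals the square of the t statistic of its beta coefficient), we compute the F statistic for removing it from the model.

This F must be larger than the required minimum value for the variable to stay. If it is smaller, the variable is removed and the step is repeated on the reduced model. When every remaining variable meets the criterion, the current model is the final result.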
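Backward elimination can be sketched the same way. As before, this is a minimal illustration under assumptions: the function names, the F-to-remove threshold of 4.0 and the data are hypothetical, and the fit solves the normal equations in plain Python. On each pass the sketch computes the partial F for dropping each remaining variable and removes the weakest one only if its F falls below the threshold.

```python
def _solve(a, b):
    """Gaussian elimination with partial pivoting for a @ x = b."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def r_squared(columns, y):
    """R^2 of an ordinary least-squares fit of y on an intercept plus the given columns."""
    rows = [[1.0] + [col[i] for col in columns] for i in range(len(y))]
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    beta = _solve(xtx, xty)
    mean_y = sum(y) / len(y)
    sse = sum((yi - sum(b * v for b, v in zip(beta, r))) ** 2 for r, yi in zip(rows, y))
    sst = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - sse / sst


def partial_f(r2_full, r2_reduced, n, k, q):
    """Fchange for q variables; k counts the variables in the larger model."""
    return ((r2_full - r2_reduced) / q) / ((1.0 - r2_full) / (n - k - 1))


def backward_elimination(variables, y, f_to_remove=4.0):
    """Start from all variables; drop the weakest while its partial F is below f_to_remove."""
    n = len(y)
    selected = list(range(len(variables)))
    while selected:
        k = len(selected)
        r2_full = r_squared([variables[i] for i in selected], y)
        # Partial F for dropping each variable from the current model
        worst_j, worst_f = None, float("inf")
        for j in selected:
            reduced = [variables[i] for i in selected if i != j]
            f = partial_f(r2_full, r_squared(reduced, y), n, k, 1)
            if f < worst_f:
                worst_j, worst_f = j, f
        if worst_f >= f_to_remove:
            break  # every remaining variable meets the criterion: final model
        selected.remove(worst_j)
    return selected


# Hypothetical data: y is built from x1 and x2 plus a little noise
x1 = [1, 2, 3, 4, 5, 6, 7, 8]
x2 = [3, 1, 4, 1, 5, 9, 2, 6]
x3 = [2, 7, 1, 8, 2, 8, 1, 8]
noise = [0.1, -0.2, 0.05, 0.15, -0.1, 0.0, 0.2, -0.2]
y = [1 + 2 * a + 3 * b + e for a, b, e in zip(x1, x2, noise)]
kept = backward_elimination([x1, x2, x3], y)
print(kept)
```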

(c) Stepwise Selection

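This algorithm combines the features of Forward Selection and Backward Elimination. It repeatedly adds variables to, or removes variables from, the model: after a variable is added, the variables already in the model are checked again, and any that no longer meet the criterion are removed. Two thresholds are used, a minimum F (or maximum significance level) for entering the model and one for staying in it.

The algorithm stops when no variable outside the model meets the entry threshold and no variable inside the model falls below the removal threshold.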
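A stepwise procedure can be sketched by alternating a forward step and a backward step. The thresholds are assumptions for illustration: F-to-enter 4.0 and a slightly smaller F-to-remove 3.9, so a variable that was just added cannot be dropped straight away, which helps keep the loop from cycling; the `max_steps` guard is an extra safety net. Function names and data are hypothetical, and the fit again solves the normal equations in plain Python.

```python
def _solve(a, b):
    """Gaussian elimination with partial pivoting for a @ x = b."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def r_squared(columns, y):
    """R^2 of an ordinary least-squares fit of y on an intercept plus the given columns."""
    rows = [[1.0] + [col[i] for col in columns] for i in range(len(y))]
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    beta = _solve(xtx, xty)
    mean_y = sum(y) / len(y)
    sse = sum((yi - sum(b * v for b, v in zip(beta, r))) ** 2 for r, yi in zip(rows, y))
    sst = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - sse / sst


def partial_f(r2_full, r2_reduced, n, k, q):
    """Fchange for q variables; k counts the variables in the larger model."""
    return ((r2_full - r2_reduced) / q) / ((1.0 - r2_full) / (n - k - 1))


def stepwise_selection(variables, y, f_to_enter=4.0, f_to_remove=3.9, max_steps=100):
    n = len(y)
    selected, remaining = [], list(range(len(variables)))
    for _ in range(max_steps):
        changed = False
        # Forward step: add the best remaining variable if it passes f_to_enter
        k = len(selected) + 1
        if remaining and n - k - 1 > 0:
            r2_current = r_squared([variables[i] for i in selected], y)
            scores = [(partial_f(r_squared([variables[i] for i in selected + [j]], y),
                                 r2_current, n, k, 1), j) for j in remaining]
            best_f, best_j = max(scores)
            if best_f > f_to_enter:
                selected.append(best_j)
                remaining.remove(best_j)
                changed = True
        # Backward step: drop the weakest selected variable if it falls below f_to_remove
        if len(selected) > 1:
            k = len(selected)
            r2_full = r_squared([variables[i] for i in selected], y)
            scores = [(partial_f(r2_full, r_squared([variables[i] for i in selected if i != j], y),
                                 n, k, 1), j) for j in selected]
            worst_f, worst_j = min(scores)
            if worst_f < f_to_remove:
                selected.remove(worst_j)
                remaining.append(worst_j)
                changed = True
        if not changed:
            break  # nothing can be added or removed: stop
    return selected


# Hypothetical data: y is built from x1 and x2 plus a little noise
x1 = [1, 2, 3, 4, 5, 6, 7, 8]
x2 = [3, 1, 4, 1, 5, 9, 2, 6]
x3 = [2, 7, 1, 8, 2, 8, 1, 8]
noise = [0.1, -0.2, 0.05, 0.15, -0.1, 0.0, 0.2, -0.2]
y = [1 + 2 * a + 3 * b + e for a, b, e in zip(x1, x2, noise)]
chosen = stepwise_selection([x1, x2, x3], y)
print(chosen)
```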

6. Final Notes

I will make a video explanation of the Partial F-Test in Multivariate Linear Regression. Also check my channel for the lessons Introduction to Linear Regression and Introduction to Multivariate Linear Regression.

If you have any challenges following this lesson, let me know in the comment box below or to the left of this page.

Thank you for reading, and well done for your efforts in learning the Partial F-Test in Multivariate Linear Regression Analysis.

Watch Linear Regression Video lesson here